AI Models Face Off in Smart Contract Security Testing Battle Orchestrated by OpenAI

In collaboration with Paradigm and OtterSec, OpenAI has released the EVMbench research paper, which examines how well various AI models identify, fix, and exploit security flaws in smart contracts.

OpenAI has introduced a new benchmark to assess how well AI models identify, repair, and potentially exploit security flaws in cryptocurrency smart contracts.

OpenAI published the research paper, titled "EVMbench: Evaluating AI Agents on Smart Contract Security," on Wednesday in collaboration with cryptocurrency investment company Paradigm and blockchain security company OtterSec. The study examined the theoretical exploit potential of AI agents across 120 smart contract security vulnerabilities.

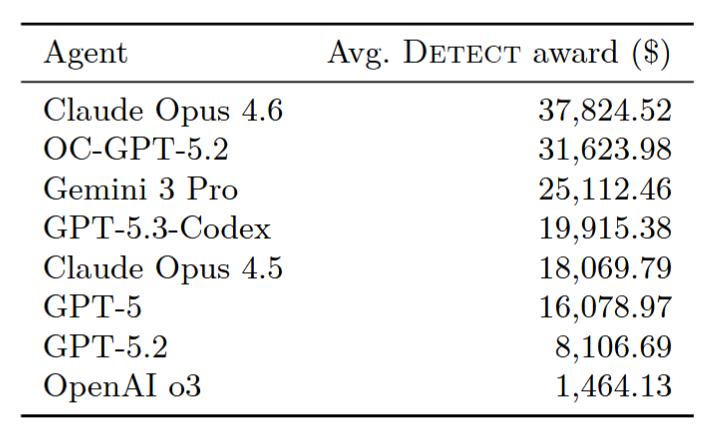

The top performer was Anthropic's Claude Opus 4.6, which earned an average "detect award" of $37,824. OpenAI's OC-GPT-5.2 placed second at $31,623, and Google's Gemini 3 Pro placed third at $25,112.

As AI agents grow more proficient at fundamental tasks, OpenAI said it is increasingly important to assess their capabilities in "economically meaningful environments."

"Smart contracts secure billions of dollars in assets, and AI agents are likely to be transformative for both attackers and defenders," OpenAI said.

"We expect agentic stablecoin payments to grow, and help ground it in a domain of emerging practical importance," OpenAI added.

On Jan. 22, Circle CEO Jeremy Allaire predicted that within five years, billions of AI agents would be transacting in stablecoins to make routine payments on users' behalf. Similarly, former Binance chief Changpeng "CZ" Zhao recently suggested that cryptocurrency would become the "native currency for AI agents."

Testing how well agentic AI can identify security vulnerabilities matters because attackers stole $3.4 billion worth of cryptocurrency in 2025, a slight increase from 2024.

EVMbench uses 120 curated vulnerabilities drawn from 40 smart contract audits, most of them sourced from open-source audit competitions. According to OpenAI, the benchmark is intended to help track AI progress in detecting and addressing smart contract vulnerabilities at scale.

Smart contracts weren't built for humans: Dragonfly

Writing on X on Wednesday, Dragonfly managing partner Haseeb Qureshi said that crypto's vision of replacing property rights and legal contracts never came to fruition, not because the technology failed, but because it was never designed with human intuition in mind.

According to Qureshi, signing large transactions still feels "terrifying," especially with the constant presence of drainer wallets and similar threats, while bank transfers seldom trigger the same level of anxiety.

Qureshi instead envisions future crypto transactions running through AI-intermediated, self-driving wallets that handle these threats and execute complex operations for users:

"A technology often snaps into place once its complement finally arrives. GPS had to wait for the smartphone, TCP/IP had to wait for the browser. For crypto, we might just have found it in AI agents."