$1.78M Moonwell Security Breach Sparks Controversy Over AI-Generated Smart Contract Code

A critical mispricing error valued cbETH at $1.12 instead of its roughly $2,200 market price, enabling attackers to drain $1.78 million from the Moonwell protocol and fueling concerns about AI-assisted smart contract development.

Moonwell, a decentralized finance (DeFi) lending protocol operating on Base and Optimism, lost approximately $1.78 million after a price oracle for Coinbase Wrapped Staked ETH (cbETH) reported a value of about $1.12 instead of the correct price of roughly $2,200. Attackers exploited the resulting price discrepancy for profit.
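
To put the size of the discrepancy in perspective, the short Python calculation below uses only the two approximate prices quoted above. The function names are illustrative and are not taken from any Moonwell code.

```python
# Approximate figures quoted in the article.
REPORTED_PRICE_USD = 1.12    # cbETH price reported by the faulty oracle
ACTUAL_PRICE_USD = 2_200.0   # approximate market price of cbETH


def undervaluation_factor(reported: float, actual: float) -> float:
    """How many times smaller the reported price is than the actual price."""
    return actual / reported


def percent_error(reported: float, actual: float) -> float:
    """Signed relative error of the reported price, in percent."""
    return (reported - actual) / actual * 100


factor = undervaluation_factor(REPORTED_PRICE_USD, ACTUAL_PRICE_USD)
error = percent_error(REPORTED_PRICE_USD, ACTUAL_PRICE_USD)
print(f"cbETH undervalued ~{factor:,.0f}x ({error:.2f}% error)")
# prints: cbETH undervalued ~1,964x (-99.95% error)
```

In other words, the protocol treated each cbETH as worth about 0.05% of its market value.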

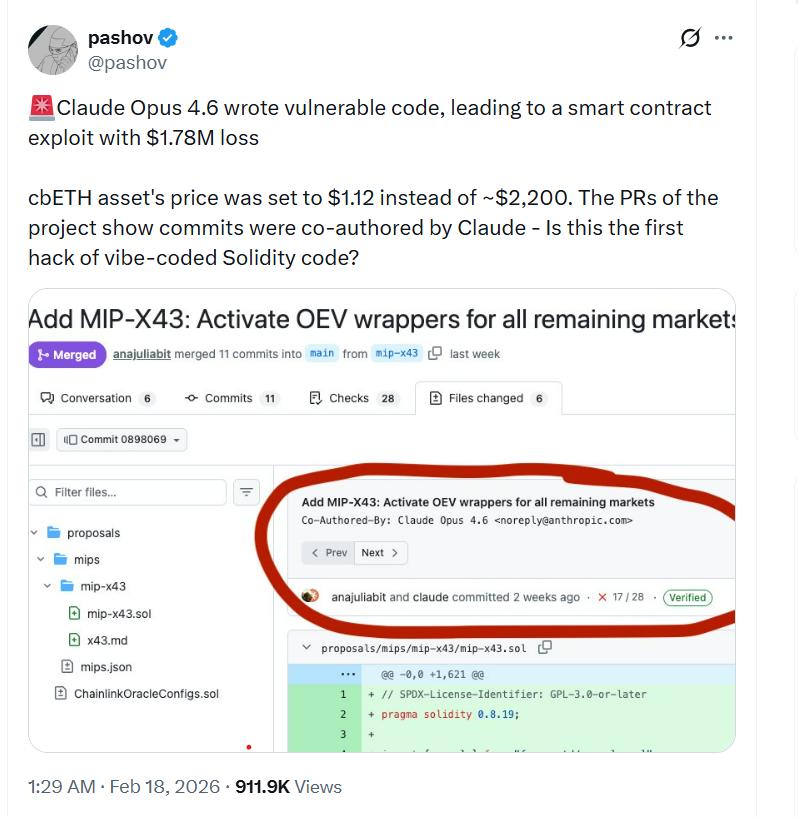

Several commits in the pull requests for the affected contracts list Anthropic's Claude Opus 4.6 as a co-author, prompting security auditor Pashov to publicly flag the incident as a cautionary example of AI-written or AI-assisted Solidity code introducing vulnerabilities.

Speaking to Cointelegraph about the breach, Pashov said he linked the case to Claude because multiple commits in the pull requests listed Claude as a co-author, indicating that "the developer was using Claude to write the code, and this has led to the vulnerability."

Even so, Pashov cautioned against blaming the vulnerability solely on AI. He described the oracle problem as the kind of error "even a senior Solidity developer could have made," arguing that the underlying issue was a lack of rigorous verification and thorough end-to-end testing.

He initially said he suspected no testing or auditing had been done at all, but later acknowledged that the team had reported running unit and integration tests in a separate pull request and had obtained an audit from Halborn.

In his view, the pricing error "could have been caught with an integration test, a proper one, integrating with the blockchain," though he declined to directly criticize other security firms.
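
As a rough illustration of the kind of check Pashov describes, the Python sketch below compares an oracle-derived price against an independent reference price and fails if the two diverge by more than a tolerance. The article does not identify the root cause of the mispricing. The two-leg feed (an ETH-per-cbETH exchange rate multiplied by an ETH/USD price), the names `FeedReadings`, `composite_price_usd`, `buggy_price_usd` and `within_tolerance`, the 5% tolerance, and the sample values are all hypothetical assumptions, not Moonwell's code. In a real integration test the readings would come from live or forked on-chain feeds rather than constants.

```python
from dataclasses import dataclass


@dataclass
class FeedReadings:
    """Hypothetical values read from price feeds during an integration test."""
    eth_per_cbeth: float  # exchange-rate leg: ETH redeemable per 1 cbETH (assumed)
    eth_usd: float        # USD price of 1 ETH (assumed)


def composite_price_usd(r: FeedReadings) -> float:
    """cbETH/USD built from both legs: (ETH per cbETH) * (USD per ETH)."""
    return r.eth_per_cbeth * r.eth_usd


def buggy_price_usd(r: FeedReadings) -> float:
    """Hypothetical bug: the exchange-rate leg is returned as if it were a USD price."""
    return r.eth_per_cbeth


def within_tolerance(price: float, reference: float, max_deviation: float = 0.05) -> bool:
    """True if price is within max_deviation (a fraction) of a positive reference price."""
    if reference <= 0:
        raise ValueError("reference price must be positive")
    return abs(price - reference) / reference <= max_deviation


# Sample values (hypothetical, chosen to match the article's ~$2,200 figure).
readings = FeedReadings(eth_per_cbeth=1.12, eth_usd=1_964.0)
reference_usd = 2_200.0  # e.g. an independent market quote (assumed)

print(within_tolerance(composite_price_usd(readings), reference_usd))  # True
print(within_tolerance(buggy_price_usd(readings), reference_usd))      # False
```

The check is deliberately coarse: a relative bound will not catch subtle drift, but it does flag a price that is off by orders of magnitude, as the cbETH figure was.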

Small loss, big governance questions

By DeFi standards, the loss is relatively small. It pales next to the Ronin bridge exploit of March 2022, in which attackers stole more than $600 million, and the many other nine-figure bridge and lending protocol hacks.

What sets the Moonwell incident apart is the combination of AI co-authorship, a seemingly basic price configuration error on a major asset, and existing audits and tests that nevertheless failed to catch the vulnerability.

Pashov said his own firm would not fundamentally change its methodology, but if code showed signs of being "vibe coded," his team would "have a bit more wide open eyes" and expect more easy-to-spot issues, although this particular oracle bug "was not that easy" to detect.

"Vibe coding" vs disciplined AI use

Fraser Edwards, co-founder and CEO of cheqd, a decentralized identity infrastructure provider, told Cointelegraph that the debate over vibe coding obscures "two very different interpretations" of how AI is used.

On one side, he explained, are non-technical founders who prompt AI to write code they cannot evaluate themselves; on the other are experienced developers who use AI to speed up refactoring, pattern exploration, and testing within a mature engineering workflow.

AI-assisted development "can be valuable, particularly at the MVP [minimum viable product] stage," he said, but "should not be treated as a shortcut to production-ready infrastructure," particularly in capital-intensive systems like DeFi.

Edwards argued that all AI-generated smart contract code should be treated as untrusted input, subject to strict version control, clear code ownership, multi-person peer review, and thorough testing, especially around high-risk areas such as access controls, oracle and pricing logic, and upgrade mechanisms.

"Ultimately, responsible AI integration comes down to governance and discipline," he said, calling for clear review gates, separation between code generation and validation, and the assumption that any contract deployed in an adversarial environment may contain latent risk.